- Computers

- Servers

- NVIDIA Jetson

- Mainboards

- System On Module

- AI Camera

- Tablets

- Development Kits

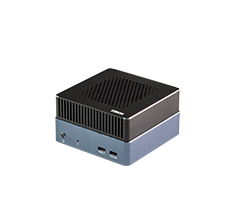

EC-ThorT5000

Edge Computing

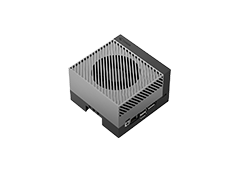

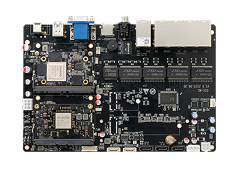

CSR2-N72R3399

ARM Cluster Servers

EC-ThorT5000

Edge Computing

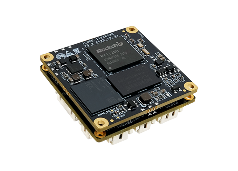

CORE-8550JD4

AI Compute Modules

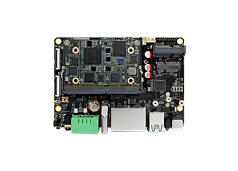

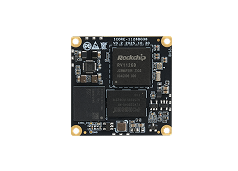

ICORE-1126BQ38

Commercial Modules

ICORE-1126BJQ38

Industrial Modules

CT36L/CT36B

AI Camera

Accessories

- Heat Sink

- GPS Module

- SATA Adapter

- POE Module

- 4G Module Kit - EG25-G

- RM500Q-GL 5G M.2 Module

- Microphone Array

- HDMI-TO-MIPI-CSI Drive Board

- DM-M10R800 V2

- AR0230 2MP Camera

- CAM-2MS2MF 2MP

- 8MP Monocular Camera

- CAM-8MS1M Monocular Camera

- USB to UART Module

- Kingston M.2 2242 SSD

- Firefly 10.1-inch monitor

- DM-M10R800 V3S

- 10.1-inch Portable Monitor

- MicroUSB Data Cable

RK182X Development Kit

Server Series

AI Servers

ARM Cluster Servers

Gateway Servers

NVIDIA Jetson Orin Series

EC-ThorT5000

Edge Computing

Customization

AI

Use PaddlePaddle FastDeploy for AI Deployment

C40PL for License Plate Recognition Application

RK3568 Industrial Tablet Face Recognition

Multi-Channel Face Recognition

Chain Store Customer Flow Statistics

Network Call Solution

Face Recognition Precision Marketing

Human Body Feature Point Detection

RK3399 Echo Cancellation

Face Recognition All-in-One PC Solution

NPU Integrated Computing

BMC (Baseboard Management Controller)

Intelligent Hardware

RK3399 4G Voice Call and SMS Function

iHC-3308GW Alibaba Cloud IoT Cloud Deployment

iHC-3308GW Compatible with OpenWrt 21.02

Debian 10 for RV1126

Smart Gateway

Cluster Server

Three Displays with Different Output and Touch Control

RK3588 AVS Panoramic Stitching

RK3588 Multi-Display Splicing

RK3588 Multiple Displays With Different Outputs

Virtual Hardware Technology

Container Virtualization for Android

Booting Systems from Multiple Storage Devices

Firefly Remote Mount System

Smart IoT Development Kit — Network Call
